A while ago some colleagues and I were writing a .NET application that fired off web requests to a third-party web API. The details of the application are unimportant, other than that it was a migration tool which sent data from one third-party store to another. There was a lot of data to shift (~9TB) and it needed to be done very quickly. So we ended up writing a multi-threaded application which could read many pieces of the stored data concurrently and send them to the third-party web API concurrently.

We wrote a beautiful, elegant and totally thread-safe application and all watched in anticipation as we fired it off for the first time, expecting to see data throughputs the likes of which had never been seen before.

Instead, the performance was only slightly better than the first version of our application, which just sent data through one piece at a time on a single thread. After a lot of digging, it turned out that .NET applications have a restriction on the number of concurrent network connections that can be open to any one host. By default this number is two, so most of our threads were simply queuing for a free connection. Fortunately, this number can be changed using the configuration file.

Simply add the following inside the `<configuration>` element of the application's configuration file (App.config) to override this value:

    <system.net>
      <connectionManagement>
        <add address="*" maxconnection="100" />
      </connectionManagement>
    </system.net>
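If editing the configuration file is not an option, the same limit can also be raised from code. The following is a minimal sketch rather than what we did in the migration tool: it assumes a .NET Framework style application using `WebRequest`, and the class and method names (`ConnectionLimitSketch`, `RaiseDefaultLimit`) are made up for illustration. `ServicePointManager.DefaultConnectionLimit` is the property that the `maxconnection` setting for address `*` corresponds to.

```csharp
using System;
using System.Net;

public static class ConnectionLimitSketch
{
    // Hypothetical helper: sets the default per-host connection limit
    // and returns the value now in effect.
    public static int RaiseDefaultLimit(int limit)
    {
        ServicePointManager.DefaultConnectionLimit = limit;
        return ServicePointManager.DefaultConnectionLimit;
    }

    public static void Main()
    {
        Console.WriteLine("Limit before: {0}", ServicePointManager.DefaultConnectionLimit);

        // Do this before the first request is made: the new default only
        // applies to hosts that have not been connected to yet.
        var applied = RaiseDefaultLimit(100);

        Console.WriteLine("Limit after: {0}", applied);
    }
}
```

Call this once at start-up, before any requests go out. On newer .NET versions `ServicePointManager` is marked obsolete, so treat this as applying to the kind of application described here.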

To demonstrate this in action, try running the following .NET console application without overriding the configuration file:

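    using System;
    using System.Net;
    using System.Threading;

    namespace ConnectionTestApplication
    {
        public static class Program
        {
            private const int ThreadCount = 100;

            // Only ever updated through Interlocked, so it does not need to be volatile.
            private static int _timesPageGot;

            public static void Main()
            {
                // Background thread, so the endless timer loop cannot keep the process alive.
                var timerThread = new Thread(TimerThreadMethod) { IsBackground = true };
                var getPageThreads = new Thread[ThreadCount];

                for (var i = 0; i < ThreadCount; i++)
                {
                    getPageThreads[i] = new Thread(GetPageMethod);
                }

                timerThread.Start();

                for (var i = 0; i < ThreadCount; i++)
                {
                    getPageThreads[i].Start();
                }

                // Keep the console open until Enter is pressed.
                Console.ReadLine();
            }

            // Prints the number of elapsed seconds once a second.
            private static void TimerThreadMethod()
            {
                var seconds = 0;

                while (true)
                {
                    Thread.Sleep(1000);
                    seconds++;
                    Console.WriteLine("Seconds: {0}", seconds);
                }
            }

            // Requests the page once and reports how many requests have completed so far.
            private static void GetPageMethod()
            {
                var request = WebRequest.Create("https://en.wikipedia.org/wiki/List_of_law_clerks_of_the_Supreme_Court_of_the_United_States");

                using (var response = request.GetResponse())
                {
                    // _timesPageGot++ is not atomic, even on a volatile field, so
                    // concurrent threads could lose updates. Interlocked.Increment is.
                    var count = Interlocked.Increment(ref _timesPageGot);
                    Console.WriteLine("Page got: {0}", count);
                }
            }
        }
    }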
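The program above leaves you to read the elapsed time off the console. If you would rather have a single measured figure, a variation along the following lines might help. It is only a sketch: `TimedRun` and `TimeConcurrent` are hypothetical names, not part of the original tool, and it takes the work as an `Action` so the timing logic can be tried without touching the network.

```csharp
using System;
using System.Diagnostics;
using System.Net;
using System.Threading;

public static class TimedRun
{
    // Runs `work` once on each of `threadCount` threads and returns the
    // wall-clock time from just before the first Start() until the last
    // thread has finished.
    public static TimeSpan TimeConcurrent(int threadCount, Action work)
    {
        var threads = new Thread[threadCount];
        for (var i = 0; i < threadCount; i++)
        {
            threads[i] = new Thread(() => work());
        }

        var stopwatch = Stopwatch.StartNew();
        foreach (var thread in threads)
        {
            thread.Start();
        }
        foreach (var thread in threads)
        {
            thread.Join(); // wait for every request to complete
        }
        stopwatch.Stop();

        return stopwatch.Elapsed;
    }

    public static void Main()
    {
        var elapsed = TimeConcurrent(100, () =>
        {
            var request = WebRequest.Create("https://en.wikipedia.org/wiki/List_of_law_clerks_of_the_Supreme_Court_of_the_United_States");
            using (request.GetResponse())
            {
                // Nothing to do with the response; we only care that it arrived.
            }
        });

        Console.WriteLine("100 requests took {0:F1} seconds", elapsed.TotalSeconds);
    }
}
```

Running it once with and once without the configuration override should give two numbers to compare directly.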

Note that the program downloads what is supposed to be the longest page on Wikipedia (at the time of writing) 100 times concurrently, one thread per request:

List of law clerks of the Supreme Court of the United States

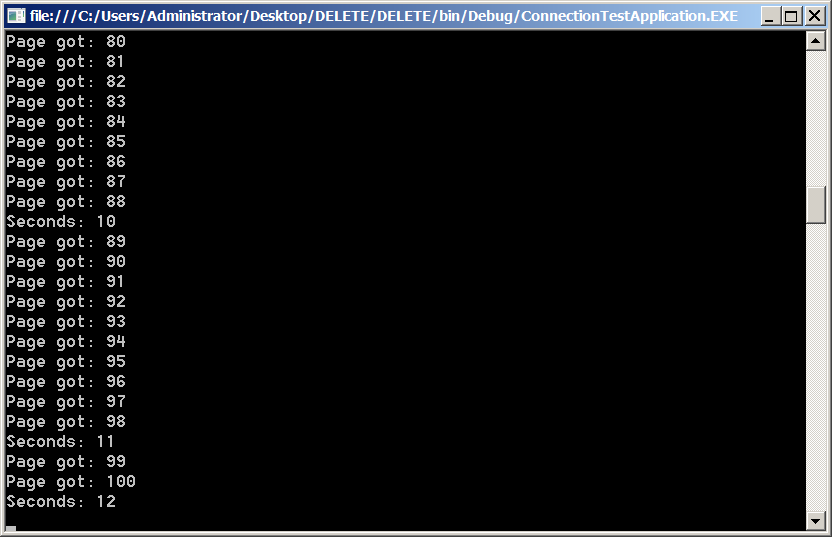

You’ll get something like this on the screen depending on the speed of your internet connection:

Note that it took under 12 seconds to download the page 100 times on my internet connection.

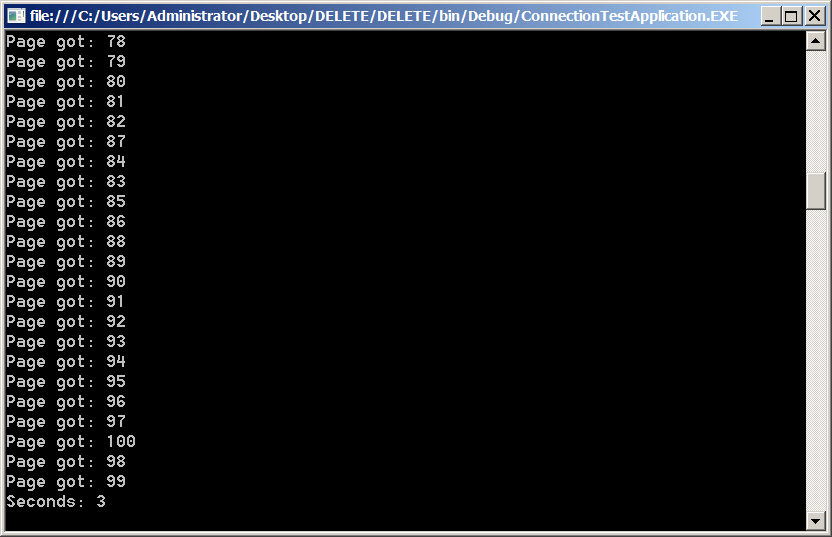

However, with the configuration file override set to allow 100 concurrent connections I get:

This time it took under 3 seconds.

Result!